ROS interface

ROS (Robot Operating System) is an open source robotics middleware suite that is widely used in robotics software development. ROS provides client libraries for many programming languages (C++, Python, Java, …) which you can use to interact with Pickit from your own application.

Warning

Pickit provides a ROS 1 interface, but in practice it is less frequently used. Backwards compatibility is currently not guaranteed across Pickit versions.

ROS 2 is currently not supported by Pickit.

Connecting to the Pickit system

Overview

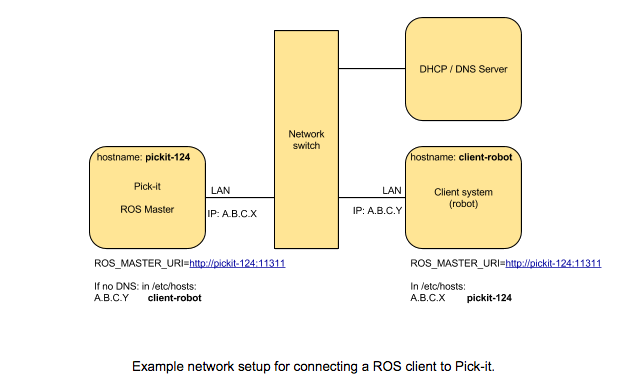

The Pickit system is running a ROS Master, which allows another system to connect to it using the ROS interfaces. Pickit exposes its ROS services and topics using standard ROS messages and a limited set of Pickit-specific ROS messages and services.

Note

Since Pickit’s ROS master is always running, it is recommended that the client system uses Pickit’s ROS master and does not start its own.

Connecting to Pickit ROS nodes requires you to define the Pickit hostname on the client system. Likewise, the client system’s name must be resolvable on the Pickit system. Preferably, the DNS Server of the client network resolves all hostnames to the LAN IP address of each host. If this is not possible, the host to IP mapping must be added to both Pickit’s and the client’s /etc/hosts.

The Pickit system is also running an ntp server. It can be used to make sure that the time between the client system and the Pickit system stays synchronised.

Connecting to Pickit

First verify that the Pickit system is reachable from your local system. Execute the following command in a terminal, replacing <pickit-pc> with the hostname of your Pickit system.

ping <pickit-pc>

The hostname of your Pickit system is shown on the top-left corner of the Pickit web interface, next to the Pickit logo. In the example below, it corresponds to pc-50000.

If the ping test fails, please check the network configuration for your local system and make sure that it’s in the same network as the Pickit system.

Set your local system to use the ROS master of the Pickit system and the ROS_IP environment variable to point to the IP of your local system.

export ROS_MASTER_URI=http://<pickit-pc>:11311/

export ROS_IP=<local-pc-ip>

After that, you can test your connection with the command below. If you see a stream of empty messages, then communication with the Pickit system has been established.

rostopic echo /pickit/heartbeat

To use ROS topics and services that come with Pickit-specific message types, you first need to source a ROS workspace containing the

im_pickit_msgs package. The package is available in our downloads page.

Refer to the catkin tools quickstart for details on how to build ROS packages and source ROS workspaces. Once the package has been built and its workspace has been sourced, run the following commands to verify connectivity with the Pickit system:

rostopic echo /pickit/calibrator/status

If you see the following message, make sure that your workspace is properly built and sourced.

ERROR: Cannot load message class for [im_pickit_msgs/PickitStatus]. Are your messages built?

ROS Services

ROS services are the recommended way to interact with the Pickit system. For testing these services from the command line, you can use the rosservice command-line tool.

Refer to the individual service files for a detailed explanation of the service request and response values. You can use rosservice info <service_name> to determine the type of an existing service.

System

Name |

Description |

|---|---|

|

Power off the Pickit processor. |

|

Reboot the Pickit processor. |

Detection

For performing detections, you can use the services listed below. If your camera is robot mounted, it is recommended to use separate capture_image and process_image calls such that the robot can immediately move after the image has been captured.

Name |

Description |

|---|---|

|

Request Pickit to find objects in the current scene. This command performs image capture and image processing in a single request. |

|

Request to capture a camera image, to be used by a following |

|

Request an object detection on the camera image that was previously captured via |

Configuration

Configuration services exists for both setup and product configuration. Replace <config_type> below with either setup or product.

Name |

Description |

|---|---|

|

Copy an existing configuration. |

|

Create a new configuration. |

|

Delete an existing configuration. |

|

Load an existing configuration. |

|

Reset unsaved changes of the currently active configuration. |

|

Save the currently active configuration. |

|

Save the currently active configuration under a different name. |

|

Switch the current active camera by specifying the camera’s serial number. |

Calibration

Robot-camera calibration can be performed using the services listed below. If you are only interested in object poses with respect to the ROI frame or camera frame, a robot-camera calibration is not necessary.

Name |

Description |

|---|---|

|

Configure the calibration process. This service should be called at the beginning of every calibration. |

|

Collect a calibration pose. It is expected that robot flange poses are periodically published on a dedicated ROS topic. |

|

Clear all previously captured calibration poses. This is also implicitly done when configuring the calibration. |

|

Calculate the calibration based on the collected input poses. |

|

Collect a new pose and validate the current calibration with that pose. |

Monitoring

Name |

Description |

|---|---|

|

Save a snapshot of the last detection. |

ROS Topics

For advanced applications, the provided ROS services may not be sufficient. In this case, you may also need to subscribe to some of the ROS topics listed below.

Name |

Description |

|---|---|

|

Raw calibrated point cloud of the currently active camera. |

|

Currently active <config_type> configuration. |

|

Point cloud used by Pickit for object detection. It only contains points belonging to the Region of Interest. |

|

List of available setup and product files. |

|

Boolean value indicating whether a detection is ongoing. |

|

List of detected objects given in the ROI frame and other relevant data. See the |

|

Same as |

|

Boolean indicating whether the robot is sending pose updates to the Pickit system. |

|

The transformation between the robot base and robot flange as sent by the robot. |

Example Python ROS node

The example code below shows how Pickit can be interfaced using the Python ROS client library. The program first loads the desired setup and product configuration and subsequently performs detections until no object can be detected anymore.

A more advanced example that demonstrates a pick and place application can be found here.

#!/usr/bin/env python

import rospy

from im_pickit_msgs.srv import CheckForObjects, LoadConfig, LoadConfigRequest

from im_pickit_msgs.msg import ObjectArray

if __name__ == "__main__":

rospy.init_node("pickit_client")

# Initialize ROS services.

rospy.wait_for_service("/pickit/configuration/product/load")

rospy.wait_for_service("/pickit/configuration/setup/load")

rospy.wait_for_service("/pickit/check_for_objects")

load_product_srv = rospy.ServiceProxy("/pickit/configuration/product/load", LoadConfig)

load_setup_srv = rospy.ServiceProxy("/pickit/configuration/setup/load", LoadConfig)

detect_srv = rospy.ServiceProxy("/pickit/check_for_objects", CheckForObjects)

# Load initial configuration by their product & setup ID (integer number).

# You can also load the files by their name (string), not shown in this

# example.

response = load_product_srv(method=LoadConfigRequest.METHOD_LOAD_BY_CONFIG_ID,

config_id=1, set_persistent=True)

assert response.success, "Failed to load product config."

response = load_setup_srv(method=LoadConfigRequest.METHOD_LOAD_BY_CONFIG_ID,

config_id=1, set_persistent=True)

assert response.success, "Failed to load setup config."

# Move robot to the detection point.

# ...

# Trigger first object detection.

response = detect_srv()

# Continuously detect objects and move a robot arm to the first detected object pose.

while response.objects.status == ObjectArray.STATUS_SUCCESS and response.objects.n_valid_objects > 0:

rospy.loginfo("Detected {} objects".format(response.objects.n_valid_objects))

first_object = response.objects.objects[0]

# Note that object poses are expressed in the pickit/reference frame.

# You can use tf or one of the transforms in the service response to

# transform them to your desired frame.

object_pick_pose = first_object.object_pick_tf

# Move robot to detection, then move back to the detection point.

# ...

# Trigger next detection.

response = detect_srv()

TF tree

Pickit uses tf to broadcast transforms between individual frames. The most important frames are listed below.

Name |

Description |

|---|---|

|

Frame in which the ROI box is expressed. Object poses are expressed in this frame when using the ROS services |

|

Frame corresponding to the base of the robot. |

|

Frame corresponding to the robot flange. Note that in most cases this is not the robot’s TCP frame. |

|

Frame of the currently active camera. |

Connecting your robot’s tf tree

If you wish to connect your robot’s tf tree with Pickit’s tf tree, you have to connect it to either pickit/robot_base or pickit/robot_ee, depending on the chosen camera mount.

Fixed camera mount

In this case the camera is rigidly mounted with respect to the robot’s base frame. Hence, you have to connect your robot’s base frame with pickit/robot_base such that the tf tree looks as shown below (simplified):

robot_base ───────► pickit/robot_base

│ │

┌───────┘ └──────┐

▼ ▼

pickit/robot_ee camera

You can achieve this by broadcasting an identity transform between your robot’s base frame (e.g. robot_base) and pickit/robot_base. When done in a ROS launch file, this could look like the following snippet:

<node name="static_tf_brdc_pickit_robot" type="static_transform_publisher"

args="0 0 0 0 0 0 robot_base pickit/robot_base" pkg="tf2_ros" />

Camera on robot

This is similar to he camera fixed case, with the addition that you also have to publish an identity transform between your robot’s flange frame and pickit/robot_ee.

robot_base ─────► pickit/robot_base

│

▼

robot_flange ─────► pickit/robot_ee

│

▼

camera

Note

It is currently not possible to disable the broadcasting of the tf transform between pickit/robot_base and pickit/robot_ee. This will cause tf loops if you connect both frames with your corresponding robot frames. Please contact us, if this is a requirement for your application.

A possible workaround for the tf loop issue would be to run a ROS node that filters the /tf topic by removing the above mentioned transform. The filtered result could then be published to another topic e.g. /tf_filtered. You would then have to remap from /tf to /tf_filtered for all your nodes (that listen to tf) e.g. like this:

rosrun rviz rviz /tf:=/tf_filtered

The following script shows an example of how to filter a /tf topic:

#!/usr/bin/env python

import rospy

import tf.msg

tf_pub = None

def tf_message_cb(msg):

global tf_pub

msg.transforms = filter(lambda x: x.child_frame_id != "pickit/robot_ee" and

x.header.frame_id != "pickit/robot_base",

msg.transforms)

tf_pub.publish(msg)

if __name__ == '__main__':

rospy.init_node("tf_filter")

tf_pub = rospy.Publisher('/tf_filtered', tf.msg.tfMessage, queue_size=10)

tf_sub = rospy.Subscriber('/tf', tf.msg.tfMessage, tf_message_cb)

rospy.spin()

Advanced topics

Publishing the robot pose

When using the Pickit ROS interface, Pickit requires the robot pose to be published on the /pickit/ros_interface/robot_pose ROS topic. Robot pose in this context is the transform between the robot base frame and the robot flange (without any tool attached).

If you can lookup the above transform of your robot via tf, you can use the Python script below to continuously publish the robot pose to the mentioned ROS topic.

#!/usr/bin/env python

import rospy

import tf2_ros

import geometry_msgs.msg

if __name__ == "__main__":

rospy.init_node('robot_pose_pub')

tfBuffer = tf2_ros.Buffer()

listener = tf2_ros.TransformListener(tfBuffer)

# Make sure you provide the correct frame ids of your robot via the

# parameter server.

tf_base_link = rospy.get_param("~tf_base_link", "pickit/robot_base")

tf_ee_link = rospy.get_param("~tf_ee_link", "pickit/robot_ee")

publish_rate = rospy.get_param("~publish_rate", 10.0)

base_to_ee_pub = rospy.Publisher("/pickit/ros_interface/robot_pose",

geometry_msgs.msg.TransformStamped,

queue_size=10)

rate = rospy.Rate(publish_rate)

while not rospy.is_shutdown():

t = rospy.Time(0)

try:

trans_stamped = tfBuffer.lookup_transform(tf_base_link,

tf_ee_link, t)

except (tf2_ros.LookupException, tf2_ros.ConnectivityException,

tf2_ros.ExtrapolationException):

rate.sleep()

continue

trans_stamped.header.frame_id = "pickit/robot_base"

trans_stamped.child_frame_id = "pickit/robot_ee"

base_to_ee_pub.publish(trans_stamped)

rate.sleep()

Pick and place example

The following example implements the logic described in the simple pick and place program.

Detections are performed asynchronously, so the robot can move while waiting for detection results.

Furthermore, object poses are transformed into the robot base frame for easier usage with other libraries e.g. MoveIt.

This example program is part of the im_pickit_msgs package which is available in our downloads page.

#!/usr/bin/env python

import rospy

import tf2_geometry_msgs

import tf2_ros

from geometry_msgs.msg import PoseStamped, Pose, Point

from im_pickit_msgs.msg import ObjectArray

from im_pickit_msgs.srv import LoadConfig, LoadConfigRequest, CaptureImage, ProcessImage

from multiprocessing.pool import ThreadPool

def trigger_detection(thread_pool):

"""Captures an image and triggers image processing."""

response = capture_image_srv()

assert (response.status == response.STATUS_SUCCESS), "Failed to capture image."

# Trigger non-blocking image processing, so the robot can move while

# the captured image is being processed.

return thread_pool.apply_async(process_image_srv)

def collect_detection_results(detection_handle):

"""Waits for detection to finish and returns the results."""

response = detection_handle.get()

return response.objects

def retrieve_first_object_pose(detection_result):

"""Retrieves the first object pose and transforms it to the pickit/robot_base frame."""

first_object = detection_result.objects[0]

object_pick_pose = first_object.object_pick_tf

pickit_ref_T_object = PoseStamped(

header=object_pick_pose.header,

pose=Pose(

position=Point(

x=object_pick_pose.transform.translation.x,

y=object_pick_pose.transform.translation.y,

z=object_pick_pose.transform.translation.z),

orientation=object_pick_pose.transform.rotation

)

)

# Transform object pose from pickit/reference frame to pickit/robot_base frame.

robot_base_T_object = tf_buffer.transform(pickit_ref_T_object, "pickit/robot_base")

return robot_base_T_object

def pick(object_pose):

"""Pick the object with the robot."""

pass

def goto_detection():

"""Move robot in detection position."""

pass

def place():

"""Place picked object."""

pass

if __name__ == "__main__":

"""

The following example implements the logic described in the simple pick and place program.

Detections are performed asynchronously, so the robot can move while waiting for detection

results. Furthermore, object poses are transformed into the robot base frame for easier

usage with other libraries e.g. MoveIt.

"""

rospy.init_node("pickit_client")

tf_buffer = tf2_ros.Buffer()

tf_listener = tf2_ros.TransformListener(tf_buffer)

thread_pool = ThreadPool(processes=1)

# Initialize ROS services.

load_product_srv = rospy.ServiceProxy("/pickit/configuration/product/load", LoadConfig)

load_setup_srv = rospy.ServiceProxy("/pickit/configuration/setup/load", LoadConfig)

capture_image_srv = rospy.ServiceProxy("/pickit/capture_image", CaptureImage)

process_image_srv = rospy.ServiceProxy("/pickit/process_image", ProcessImage)

load_product_srv.wait_for_service()

load_setup_srv.wait_for_service()

capture_image_srv.wait_for_service()

process_image_srv.wait_for_service()

# Load initial configuration by their product & setup name.

product_config = "product_name" # Replace with actual product name.

setup_config = "setup_name" # Replace with actual setup name.

response = load_product_srv(method=LoadConfigRequest.METHOD_LOAD_BY_CONFIG_NAME,

config=product_config, set_persistent=True)

assert response.success, "Failed to load product config."

response = load_setup_srv(method=LoadConfigRequest.METHOD_LOAD_BY_CONFIG_NAME,

config=setup_config, set_persistent=True)

assert response.success, "Failed to load setup config '{}'.".format(setup_config)

# Trigger detection and wait for detection result.

goto_detection()

detection_handle = trigger_detection(thread_pool)

detection_result = collect_detection_results(detection_handle)

# Main loop: Pick and place the first detected object until no more object is detected.

while True:

if detection_result.status != ObjectArray.STATUS_SUCCESS \

or not detection_result.n_valid_objects:

rospy.loginfo("No objects found.")

break

rospy.loginfo("Detected {} objects".format(detection_result.n_valid_objects))

object_pose = retrieve_first_object_pose(detection_result)

pick(object_pose)

goto_detection()

detection_handle = trigger_detection(thread_pool)

place()

detection_result = collect_detection_results(detection_handle)