Warning

You are reading the documentation for an older Pickit release (3.1). Documentation for the latest release (4.0) can be found here.

How to have faster cycle times?

For a vision-based application to be a success, parts not only need to be detected and picked reliably, but cycle time requirements must also be satisfied. These requirements can be the result of technical specifications, such as the expected feed rate in a machine tending application. They can also result from business constraints, such as the expected return on investment time for the robot cell.

Cycle time refers to the time spent performing a full cycle of the application, such as a full pick and place sequence. This article focuses on how to optimize the cycle time of an application that is already picking reliably. The sections below cover the main strategies for reducing it.

Mask object detection time with robot motions

Probably the most significant (and easiest) cycle time optimization for a vision-guided picking application is to eliminate or minimize the time the robot is idle, waiting for detection results to be ready. Ideally, the robot should be busy at all times, picking or placing parts.

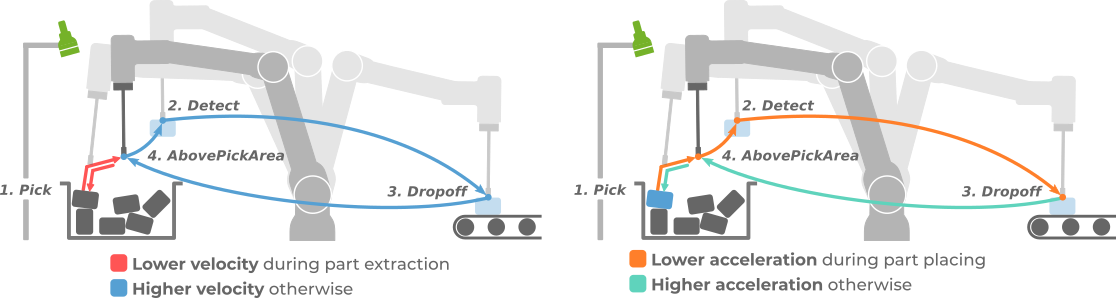

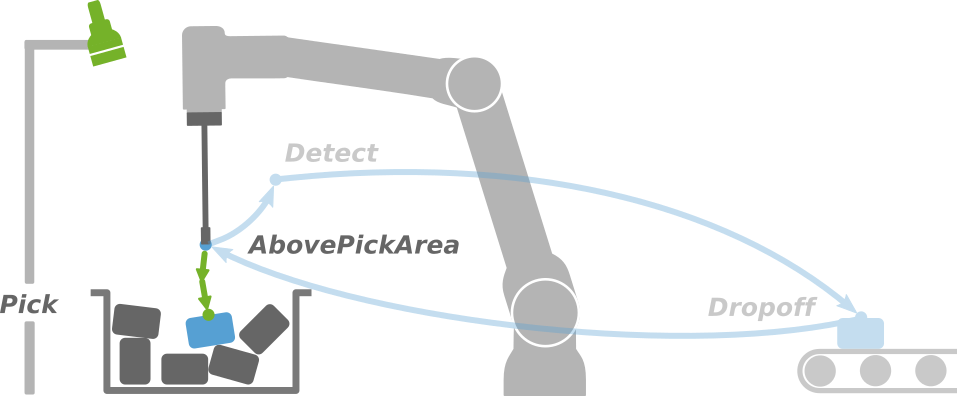

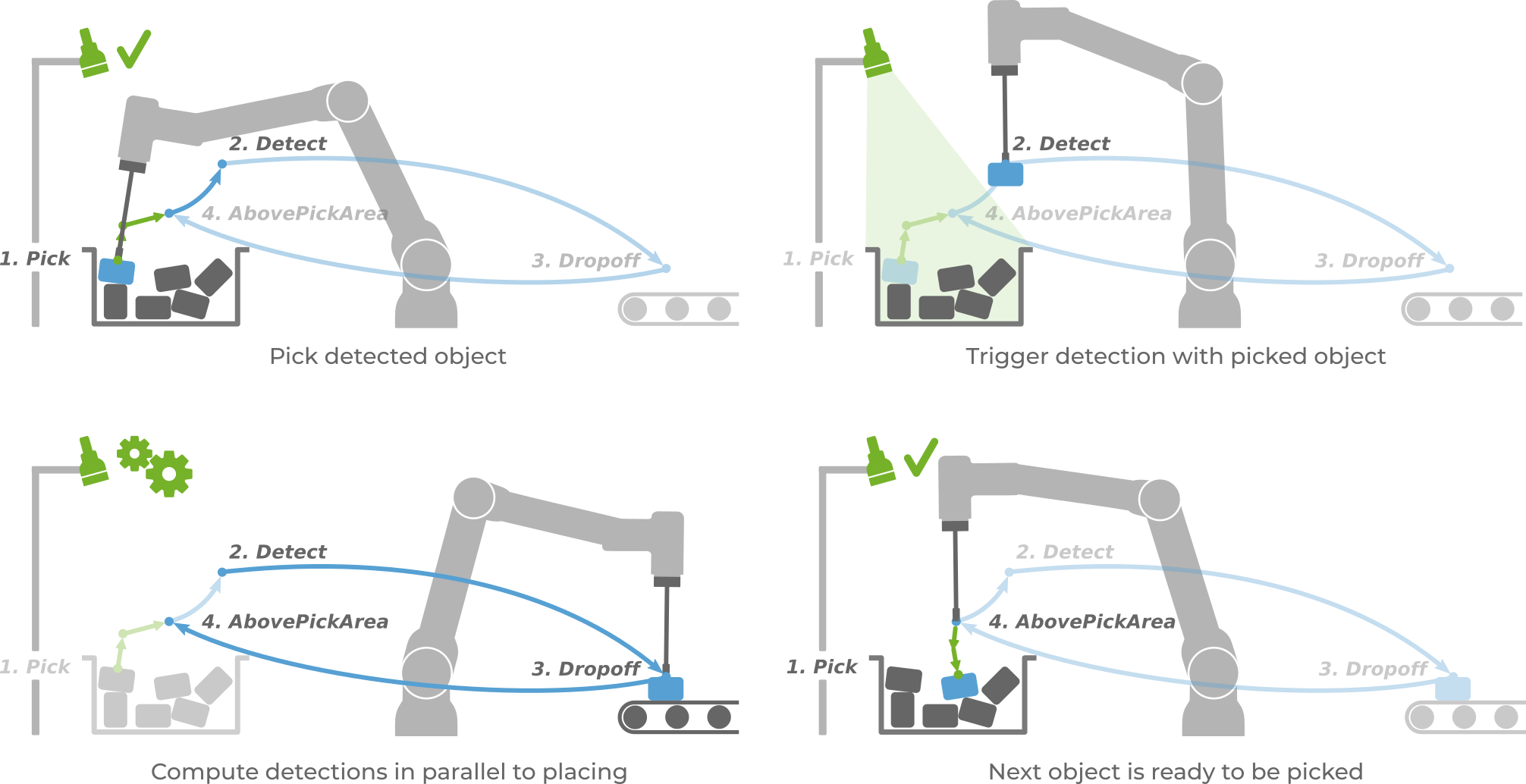

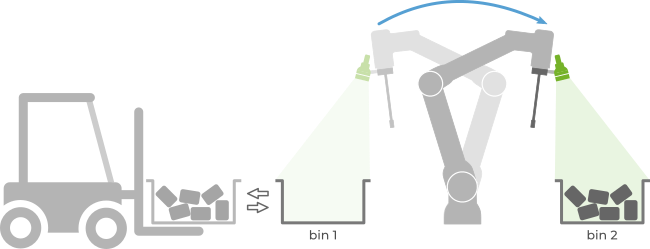

To this end, the idea is to perform object detection in parallel to the placing motion:

Go to the pick point produced by the previous detection run (below, top left).

Pick the part, go to the Detect point and trigger a new detection (below, top right). Learn more about what constitutes a good detection point and camera location here.

Perform the placing motion in parallel to object detection (below, bottom left). Typically, object detection completes before the placing motion, fully masking the detection time. Learn more about how to have faster detections here.

The next part to be picked is ready to be sent to the robot (below, bottom right).

The robot-independent pick and place program describes how to write a program that masks object detection time. Additionally, the example picking programs shipped with Pickit robot integrations already have this optimization built in.
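
The order of operations described above can be sketched as follows. This is a minimal illustration in Python, not an actual robot program: `FakeCell` and its methods `trigger_detection`, `wait_for_result` and `move` are hypothetical stand-ins that only log calls, and do not correspond to the functions of any Pickit robot integration.

```python
class FakeCell:
    """Hypothetical stand-in for the robot and the vision system; it only logs calls."""

    def __init__(self, parts):
        self.pending = list(parts)  # parts the fake detector will report, in order
        self.log = []

    def trigger_detection(self):
        # Assumed to be non-blocking: starts a detection run and returns at once.
        self.log.append("trigger detection")

    def wait_for_result(self):
        # Assumed to block until the detection run has finished.
        self.log.append("wait for result")
        return self.pending.pop(0) if self.pending else None

    def move(self, action):
        self.log.append(action)


def run(cell):
    """Pick and place until no more parts are found; return the call log."""
    cell.move("go to Detect point")
    cell.trigger_detection()
    part = cell.wait_for_result()      # only this first detection is unmasked
    while part is not None:
        cell.move(f"pick {part}")
        cell.move("go to Detect point")
        cell.trigger_detection()       # detection starts here...
        cell.move(f"place {part}")     # ...and runs while the robot places
        part = cell.wait_for_result()  # typically ready by now, so no idle time
    return cell.log


for entry in run(FakeCell(["A", "B"])):
    print(entry)
```

The key point is that the placing motion sits between triggering a detection and waiting for its result, so the robot is already busy while the detection runs.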

Robot-mounted cameras

When the camera is mounted on the robot, detection time cannot be fully masked by robot motions. Object detection consists of two steps: image capture and image processing. During image capture, the robot must remain stationary at the Detect point, so only the image processing time can be masked with robot motions. Learn more on optimizing image capture time here, and how this affects the robot program logic here. Also, read the next section on picking multiple objects per detection run for an additional optimization that applies to some applications.

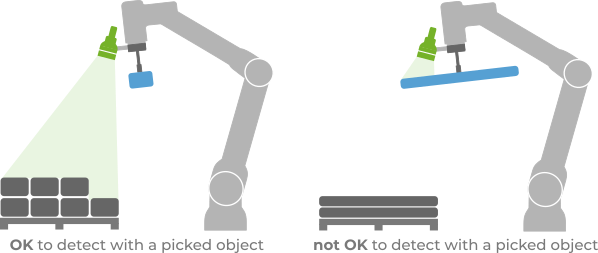

Note that, to mask detection time, image capture must take place while the robot is holding the picked object. The optimization therefore only applies if the picked object does not block the camera view.
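
To get a feeling for the difference between a fixed and a robot-mounted camera, the steady-state cycle time can be approximated with a simple model. The sketch below is a rough estimate under stated assumptions: the function name, the way a cycle is split into four durations, and the example values are all made up for illustration and are not Pickit measurements.

```python
def cycle_time(t_pick, t_place, t_capture, t_process, robot_mounted):
    """Rough steady-state time of one pick and place cycle, in seconds.

    t_pick:    motion to the pick point, gripping, and motion to the Detect point
    t_place:   placing motion
    t_capture: image capture time
    t_process: image processing time
    """
    if robot_mounted:
        # The robot stands still during capture; only processing overlaps
        # with the placing motion.
        return t_pick + t_capture + max(t_place, t_process)
    # Fixed camera: capture and processing both overlap with the placing motion.
    return t_pick + max(t_place, t_capture + t_process)


# Illustrative values only.
print(cycle_time(3.0, 4.0, 1.0, 1.5, robot_mounted=False))  # 7.0
print(cycle_time(3.0, 4.0, 1.0, 1.5, robot_mounted=True))   # 8.0
```

In this example, the robot-mounted camera adds exactly the image capture time to each cycle, because the placing motion is long enough to mask the processing time in both cases.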

Picking multiple objects per detection run

There are two situations in which detection time cannot be fully masked with robot motions:

The camera is robot-mounted, and the image capture time is not masked.

The placing motion is very short, and ends up being faster than the object detection time.

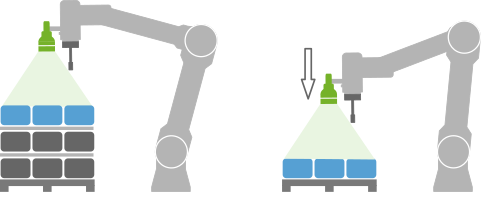

One way to reduce the impact of this unmasked time to a fraction is to pick more than one object per detection run. This is only applicable when picking one part does not significantly disturb the location of neighboring parts. This is the case, for example, when parts are presented in a semi-structured way, such as in depalletizing, where the robot can pick all detections in a layer.

In the example below, if there are three parts per layer and Pickit finds them all, the unmasked image capture time is spread over three picks instead of just one.

The pickit_next_object() function should be used in your robot program to request the next detected object from Pickit without triggering a new detection.
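
The effect of spreading the unmasked time over several picks can be estimated with a short calculation. This is a simplified sketch: the function name and the example durations are assumptions chosen for illustration, and it assumes that every detection run yields the same number of pickable parts.

```python
def average_cycle_time(t_pick, t_place, t_unmasked, picks_per_detection):
    """Average time per picked part, in seconds.

    t_unmasked is the part of the detection time during which the robot is
    idle. It is paid once per detection run, not once per pick.
    """
    return t_pick + t_place + t_unmasked / picks_per_detection


# Illustrative values only: 1.5 s of unmasked image capture per detection run.
print(average_cycle_time(3.0, 4.0, 1.5, picks_per_detection=1))  # 8.5
print(average_cycle_time(3.0, 4.0, 1.5, picks_per_detection=3))  # 7.5
```

With three picks per detection run, each pick carries only a third of the unmasked time.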

Tip

Picking multiple objects per detection run is applicable beyond semi-structured part layouts.

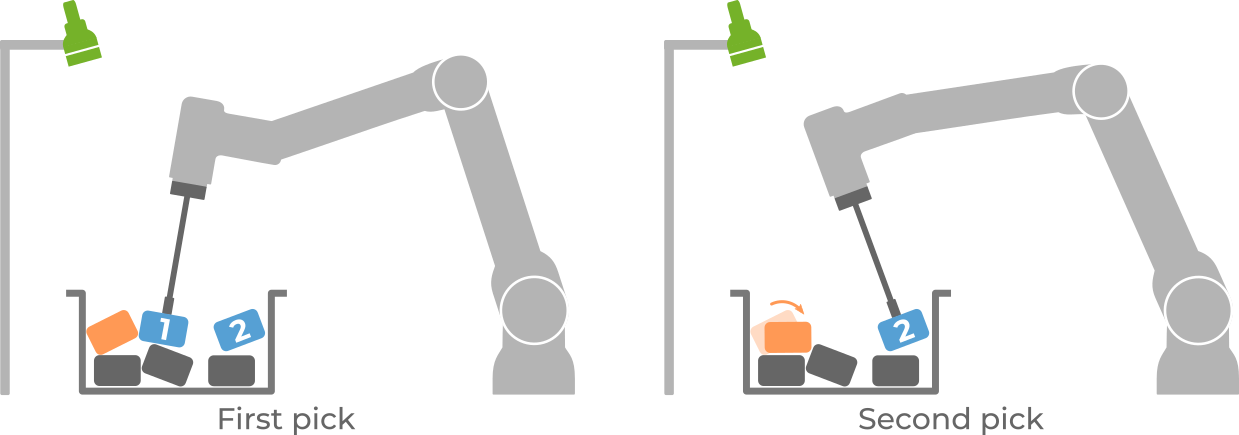

If a bin picking application is such that the disturbance of picking a part is localized to its immediate vicinity, you can enable the Objects distance filter, which only allows picking objects that are at least a certain distance apart.

In the example below, the orange object is detected, but is marked as unpickable for being too close to object 1, as its location can be disturbed when 1 is picked.

This filter is an advanced setting, and needs to be enabled by going to Settings > Advanced settings > Enable advanced picking settings.

It shows up in the Pick strategy section of the Picking page.
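
To illustrate the idea behind a minimum-distance filter, here is a small sketch. It is not Pickit's implementation: the greedy strategy (walk through the objects in pick order and reject any object that is too close to an already accepted one), the function name and the coordinates are assumptions made for this example.

```python
import math


def mark_pickable(objects, min_distance):
    """Return {name: pickable} for objects given as (name, (x, y, z)) in pick order."""
    accepted = []  # positions of objects already marked as pickable
    pickable = {}
    for name, position in objects:
        far_enough = all(
            math.dist(position, other) >= min_distance for other in accepted
        )
        pickable[name] = far_enough
        if far_enough:
            accepted.append(position)
    return pickable


# Illustrative positions in meters: "orange" lies 5 cm away from object "1".
detections = [
    ("1", (0.00, 0.00, 0.00)),
    ("orange", (0.05, 0.00, 0.00)),
    ("2", (0.30, 0.00, 0.00)),
]
print(mark_pickable(detections, min_distance=0.10))
# {'1': True, 'orange': False, '2': True}
```

Objects marked as unpickable are not lost: they can be found again in the next detection run, once their neighbors have been picked.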

Mask part feeding time with picking

In some applications, feeding new parts to pick is a process that can take a non-negligible amount of time, such as when an empty bin or pallet needs to be replaced. To avoid interrupting part picking during the feeding process, it is recommended to use multiple picking stations.
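
The benefit of a second picking station can be estimated with a simple model. The sketch below rests on several assumptions that are not part of the original text: the function name and example values are invented, switching between stations costs no time, and an empty station is refilled while the robot picks from the other one.

```python
def time_per_bin(parts_per_bin, t_cycle, t_feed, stations):
    """Rough steady-state time to process one bin, in seconds."""
    picking = parts_per_bin * t_cycle
    if stations >= 2:
        # Feeding overlaps with picking from the other station. The robot
        # only waits if feeding takes longer than emptying a bin.
        return max(picking, t_feed)
    # Single station: picking stops for the whole feeding time.
    return picking + t_feed


# Illustrative values only: 20 parts per bin, 8 s per pick, 60 s to swap a bin.
print(time_per_bin(20, 8.0, 60.0, stations=1))  # 220.0
print(time_per_bin(20, 8.0, 60.0, stations=2))  # 160.0
```

In this example the feeding time is fully masked, since swapping a bin takes less time than emptying one.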

Minimize the time spent in robot motions

This section covers a number of general recommendations that are independent of the usage of a vision system, as well as a few ways in which Pickit can explicitly contribute to faster robot motions.

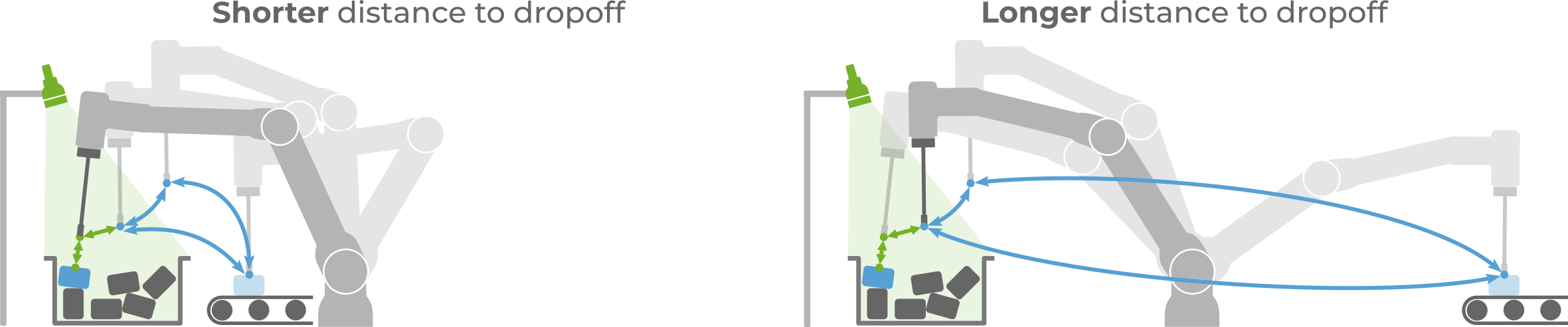

Minimize path lengths

A proper placement of the elements in your robot cell can reduce the distances travelled by the robot tool, and hence the time it takes to traverse them. This includes the location of the robot, the bin or pallet, the dropoff location, and intermediate stations (if applicable). By “location” we refer not only to the position on the ground, but also to the height: consider using raisers for the robot and/or bin, if required.