Warning

You are reading the documentation for an older Pickit release (3.3). Documentation for the latest release (4.0) can be found here.

Surface treatment and dispensing

Overview

In surface treatment and dispensing applications such as sanding, sealing or deburring, a robot needs to follow a trajectory relative to a part. Oftentimes, when a part arrives at the robot cell, its location is not fully known, so two important concerns need to be addressed:

On the one hand, it should be possible to teach the trajectory relative to the part, such that if the part location changes, the trajectory moves along, as if fixed to the part. Many robot programming languages support this natively using the concept of a user frame.

On the other hand, the part location should be known with enough accuracy. This is the role of Pickit.

This article describes the logic behind the user frame robot program needed in a surface treatment and dispensing application.

Application example: forklift sanding

Doosan Industrial Vehicle uses Pickit with a Yaskawa polishing robot to detect vehicle counterweights on a conveyor. The system is used to produce 60 parts per day, 41 of which are unique in shape.

This application utilizes the concept of a user frame, which will be explained in the following section. Using this concept, the robot motion can be adjusted so that the robot manipulates the part in the same way as programmed even if the position of the part is different from the one set when the robot program was taught.

The concept of user frame

A user frame is a user-defined coordinate system in a known location with respect to a part. When a robot task needs to follow a trajectory relative to the part, its waypoints can be defined with respect to the user frame. This way, if the part location changes and the new user frame location is known, the trajectory waypoints remain valid.

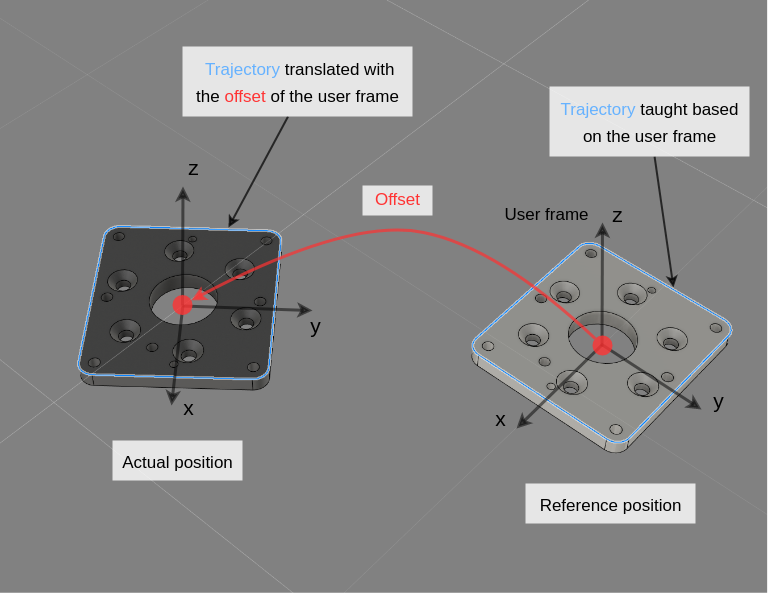

In the picture above, a user frame is defined in the center of the part at its reference location, and a trajectory is taught with respect to that user frame. When the part is moved by a certain offset, the user frame can be updated using a Pickit detection. As a result, the trajectory also shifts to the actual position of the part.
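As a rough illustration of why the waypoints remain valid, the sketch below expresses user-frame waypoints in the robot base frame using a homogeneous transform. It is plain Python rather than robot code. The helper names (`make_frame`, `to_base`), the numeric values, and the restriction to a rotation about Z are assumptions made for brevity; none of them come from Pickit or a robot controller.

```python
import math

def make_frame(x, y, z, yaw_deg):
    """Return a 4x4 homogeneous transform: translation (x, y, z) plus a rotation about Z."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c, -s, 0.0, x],
        [s, c, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ]

def to_base(frame, point):
    """Express a point given in the user frame in robot base coordinates."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(sum(frame[r][k] * v[k] for k in range(4)) for r in range(3))

# Waypoints taught once, relative to the user frame (hypothetical values, in metres).
trajectory = [(0.10, 0.00, 0.02), (0.10, 0.05, 0.02), (0.00, 0.05, 0.02)]

# User frame at teach time, and after the part was shifted and rotated by 90 degrees.
frame_taught = make_frame(0.50, 0.20, 0.00, 0.0)
frame_moved = make_frame(0.60, 0.10, 0.00, 90.0)

# The same taught waypoints yield different base-frame targets for each part location.
taught_in_base = [to_base(frame_taught, p) for p in trajectory]
moved_in_base = [to_base(frame_moved, p) for p in trajectory]
```

Only the frame changes between the two calls; the taught `trajectory` list is never modified, which is the property a user frame provides.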

Generic user frame program logic

This section describes the logic for a simple user frame program. It is divided into three parts:

Trajectory teaching logic, which is used to define the user frame and the trajectory to be followed with respect to that user frame. This is run once, when setting up the application.

Trajectory execution logic, which is used to follow the taught trajectory for every detected object. This is what runs when the application is in production.

Application-specific hooks, containing the parts of the above logic that are specific to each application.

The following logic has the purpose of presenting the basic ideas for writing a good user frame robot program. It is written in robot-independent pseudo-code using a syntax similar to that of Python and can be downloaded here.

Note

The code samples presented in this article are pseudo-code, and are not meant to be executed.

The minimum inputs required to run the user frame program are:

Pickit configuration: setup and product IDs to use for object detection.

Waypoints: These points are defined globally to be used both in the Trajectory teaching logic and the Trajectory execution logic.

Home: Start point of the application.

Detect: Where to perform object detection from. Refer to this article for guidelines on how to make a good choice.

Point1, Point2, Point3, …, PointN: N points defined by the user to perform the desired application. These points are defined relative to the PickitUserFrame.

Robot tool actions: defined by the user and specific to the application.

Trajectory teaching logic

During setup, a Pickit detection is triggered. If the detection is successful, the user frame PickitUserFrame can be set to the received pick point PickitPick. Based on that user frame, the desired trajectory points Point1, Point2, …, PointN can be defined in two ways:

In manual mode, by jogging the robot TCP to every point and then saving the robot position with respect to the user frame using the robot pendant.

In offline mode, using robot-specific software, based on the CAD model of the part.
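Conceptually, saving a position with respect to the user frame amounts to applying the inverse of the user frame to the position expressed in the robot base frame. The sketch below shows that conversion in plain Python. It assumes position-only waypoints and a user frame rotated about Z only, and the function names and values are hypothetical. Robot controllers typically perform this step internally when a user frame is active.

```python
import math

def make_frame(x, y, z, yaw_deg):
    """User frame as (rotation matrix, translation), rotated about Z only."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    rotation = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    return rotation, (x, y, z)

def base_to_frame(frame, p_base):
    """Express a base-frame position in the user frame: R^T (p - t)."""
    rotation, translation = frame
    d = [p_base[i] - translation[i] for i in range(3)]
    return tuple(sum(rotation[k][r] * d[k] for k in range(3)) for r in range(3))

def frame_to_base(frame, p_frame):
    """Express a user-frame position in the base frame: R p + t."""
    rotation, translation = frame
    return tuple(
        sum(rotation[r][k] * p_frame[k] for k in range(3)) + translation[r]
        for r in range(3)
    )

# Hypothetical values: user frame from the teaching detection, and a jogged TCP position.
pickit_user_frame = make_frame(0.50, 0.20, 0.00, 90.0)
tcp_in_base = (0.50, 0.30, 0.05)

# Position to store as a trajectory point, relative to the user frame.
point1 = base_to_frame(pickit_user_frame, tcp_in_base)

# Converting back with the same frame recovers the jogged position.
round_trip = frame_to_base(pickit_user_frame, point1)
```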

Note

In the trajectory teaching phase, it is important to teach the user frame based on a Pickit detection. This will ensure a correct link between the Pickit detection result and the user frame for every new part.

    if not pickit_is_running():
        print("Pickit is not in robot mode. Please enable it in the web interface.")
        halt()

    before_start()
    pickit_configure(setup, product)
    retries = 5  # Number of detection retries before bailing out.

    goto_detection()
    pickit_find_objects_with_retries(retries)
    pickit_get_result()

    if pickit_object_found():

        # Define user frame based on pick point from first detection.
        PickitUserFrame = PickitPick

        # Trajectory points for your application, expressed in the PickitUserFrame.
        # Replace with actual values.
        global Point1 = [x,y,z,rx,ry,rz]
        global Point2 = [x,y,z,rx,ry,rz]
        global Point3 = [x,y,z,rx,ry,rz]
        ...
        global PointN = [x,y,z,rx,ry,rz]

    elif pickit_empty_roi():
        print("The ROI is empty.")

    elif pickit_no_image_captured():
        print("Failed to capture a camera image.")

    else:
        print("The ROI is not empty, but the requested object was not found or is unreachable.")

    after_end()

Trajectory execution logic

This logic is called for every object that arrives at the robot cell.

     1  if not pickit_is_running():
     2      print("Pickit is not in robot mode. Please enable it in the web interface.")
     3      halt()
     4
     5  before_start()
     6  pickit_configure(setup, product)
     7  retries = 5  # Number of detection retries before bailing out.
     8
     9  # Find the object to perform the application
    10  goto_detection()
    11  pickit_find_objects_with_retries(retries)
    12  pickit_get_result()
    13
    14  if pickit_object_found():
    15
    16      # Update user frame based on the pick point sent from pickit.
    17      PickitUserFrame = PickitPick
    18
    19      if (pickit_is_reachable(Point1)
    20          and pickit_is_reachable(Point2)
    21          and pickit_is_reachable(Point3)
    22          # ...
    23          and pickit_is_reachable(PointN)):
    24          # This is the application to be performed on the detected object.
    25          follow_trajectory()
    26
    27      else:
    28          print("Object is unreachable by the robot")
    29
    30  elif pickit_empty_roi():
    31      print("The ROI is empty.")
    32
    33  elif pickit_no_image_captured():
    34      print("Failed to capture a camera image.")
    35
    36  else:
    37      print("The ROI is not empty, but the requested object was not found or is unreachable.")
    38
    39  after_end()

The following is a breakdown of the trajectory execution logic, referring to the line numbers of the listing above:

Lines 1-7: Initialization

     1  if not pickit_is_running():
     2      print("Pickit is not in robot mode. Please enable it in the web interface.")
     3      halt()
     4
     5  before_start()
     6  pickit_configure(setup, product)
     7  retries = 5  # Number of detection retries before bailing out.

These commands are run once before starting the application. They take care of:

Checking whether Pickit is in robot mode, and bailing out if not. Pickit only accepts robot requests when in robot mode.

Calling the before_start() hook, which contains application-specific initialization logic.

Loading the Pickit configuration (setup and product) to use for object detection.
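The retries value set during initialization bounds how many detections are attempted before giving up. How pickit_find_objects_with_retries() behaves internally is not specified in this article, so the following is only a minimal sketch of one plausible retry loop. The `detect` callable is a hypothetical stand-in for a detection request, and counting one initial attempt plus `retries` further attempts is an assumption.

```python
def find_objects_with_retries(detect, retries):
    """Call detect() until it reports an object or the retries are used up.

    detect is a hypothetical callable returning True when an object was found.
    Returns a tuple (found, attempts_made).
    """
    attempts = 0
    for _ in range(1 + retries):  # one initial attempt plus the retries (assumed)
        attempts += 1
        if detect():
            return True, attempts
    return False, attempts

# Simulated detector that fails twice before succeeding.
outcomes = iter([False, False, True])
found, attempts = find_objects_with_retries(lambda: next(outcomes), retries=5)

# Simulated detector that never succeeds: the loop stops after the last retry.
never_found, all_attempts = find_objects_with_retries(lambda: False, retries=5)
```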

Lines 9-38: Application

     9  # Find the object to perform the application
    10  goto_detection()
    11  pickit_find_objects_with_retries(retries)
    12  pickit_get_result()
    13
    14  if pickit_object_found():
    15
    16      # Update user frame based on the pick point sent from pickit.
    17      PickitUserFrame = PickitPick
    18
    19      if (pickit_is_reachable(Point1)
    20          and pickit_is_reachable(Point2)
    21          and pickit_is_reachable(Point3)
    22          # ...
    23          and pickit_is_reachable(PointN)):
    24          # This is the application to be performed on the detected object.
    25          follow_trajectory()
    26
    27      else:
    28          print("Object is unreachable by the robot")
    29
    30  elif pickit_empty_roi():
    31      print("The ROI is empty.")
    32
    33  elif pickit_no_image_captured():
    34      print("Failed to capture a camera image.")
    35
    36  else:
    37      print("The ROI is not empty, but the requested object was not found or is unreachable.")
    38

First, a detection is triggered to locate the object. If the object is detected and is reachable, the application can be performed.

The reachability check is done using pickit_is_reachable(Point).

The following cases are handled:

There are no pickable objects: cannot perform the application, as either the object is not detected or it is unreachable.

The object is detected and the trajectory is reachable: execute the application.

The object is detected but at least one trajectory point is not reachable: cannot perform the application.
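The three cases above can be sketched as a small decision function. This is an illustrative Python model, not robot code: `is_reachable` is a hypothetical stand-in for pickit_is_reachable(Point), and the toy reachability rule (distance from the robot base) is an assumption chosen only to make the example executable.

```python
from enum import Enum

class Outcome(Enum):
    EXECUTE = "execute the application"
    UNREACHABLE = "object detected, trajectory not reachable"
    NOT_FOUND = "no pickable object"

def decide(object_found, waypoints, is_reachable):
    """Map a detection result to one of the handled cases."""
    if not object_found:
        return Outcome.NOT_FOUND
    # Every waypoint must be reachable, mirroring the chained 'and' checks.
    if all(is_reachable(p) for p in waypoints):
        return Outcome.EXECUTE
    return Outcome.UNREACHABLE

def within_reach(point, max_reach=1.0):
    """Toy model: a waypoint is reachable if it lies within max_reach of the base."""
    x, y, z = point
    return (x * x + y * y + z * z) ** 0.5 <= max_reach

near = [(0.4, 0.1, 0.2), (0.5, 0.1, 0.2)]
far = [(0.4, 0.1, 0.2), (1.5, 0.1, 0.2)]

case_execute = decide(True, near, within_reach)
case_unreachable = decide(True, far, within_reach)
case_not_found = decide(False, near, within_reach)
```

Using `all()` over a list of waypoints is one way to avoid writing a separate check per point when the number of trajectory points varies.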

Line 39: Termination

    39  after_end()

The after_end() hook, which contains application-specific termination logic, is called last.

Application-specific hooks

The contents of these hooks are typically specific to a particular application, as they contain motion sequences and logic that depend on the robot cell layout, including:

The robot type and location

The camera mount and location

The part to detect

The desired trajectory

The used tool

The following implementations are a good starting point for a generic surface treatment and dispensing application, but it’s necessary to adapt them to some extent by adding waypoints or extra logic.

    # Actions performed before starting the application.
    def before_start():
        movej(Home)

    # Move the robot to the point from which object detection is triggered.
    def goto_detection():
        movej(Detect)

    # Action performed during the trajectory following.
    def enable_tool():
        # Add logic for enabling tool.

    def disable_tool():
        # Add logic for disabling tool.

    def follow_trajectory():
        # Start the application by enabling the tool if needed.
        enable_tool()

        # Move to taught Points w.r.t selected user frame.
        movel(Point1, Userframe = PickitUserFrame)
        movel(Point2, Userframe = PickitUserFrame)
        movel(Point3, Userframe = PickitUserFrame)
        ...
        movel(PointN, Userframe = PickitUserFrame)

        disable_tool()

    # Actions performed after the application is done.
    def after_end():
        movej(Home)
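To experiment with the structure of follow_trajectory() away from a robot, the hook can be exercised against a stand-in that merely records commands. The sketch below is an assumption-laden illustration: `FakeRobot`, its `movel` and `set_tool` methods, and the string placeholders for points and the user frame are all hypothetical and do not correspond to any robot brand's API.

```python
class FakeRobot:
    """Stand-in for a robot controller that only records the commands it receives."""

    def __init__(self):
        self.log = []

    def movel(self, point, user_frame):
        self.log.append(("movel", point, user_frame))

    def set_tool(self, enabled):
        self.log.append(("tool", enabled))

def follow_trajectory(robot, points, user_frame):
    """Enable the tool, visit every taught point in order, then disable the tool."""
    robot.set_tool(True)
    try:
        for point in points:
            robot.movel(point, user_frame)
    finally:
        # Disable the tool even if a motion raises, so it is never left running.
        robot.set_tool(False)

robot = FakeRobot()
points = ["Point1", "Point2", "Point3"]
follow_trajectory(robot, points, user_frame="PickitUserFrame")
```

Looping over a list of points replaces the repeated movel lines, and the `try`/`finally` block is a design choice of this sketch rather than something the pseudo-code above prescribes.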

Robot brands

The following are implementations of the user frame program for specific robot brands.